Previously I wrote a blog post about how to measure hamster activity via an IoT wheel. This reminds me of another personal project I did back in the winter of 2018/2019, for measuring car performance.

We recently welcomed our new family member Qiuqiu (球球), a female Syrian (Golden) hamster, home. She seems to enjoy the new environment fairly well, but she is a quiet girl and does not show much activity during the daytime.

Of course we understand that hamsters are nocturnal animals, which means they sleep during the daytime and become more active at night. But I started wondering how she was doing during the nights, especially how much she ran on the hamster wheel.

Let’s do something about it.

Picture: Qiuqiu with her wheel

Recently we needed to fetch a big dataset from an API via PowerShell, then import it into Azure Data Explorer (ADX).

`#Used Measure-Command for measuring performance`

The data.json file looked perfectly fine, but during the import ADX reported the error “invalid json format”.

Copying and pasting the content of data.json into an online validation tool such as https://jsonlint.com/ showed that the JSON objects were valid.

The local jsonlint tool, however, reported an error, which showed that the data.json file had an encoding issue.

`PS C:\Users\lufeng\Desktop> jsonlint .\data.json`

Switching to a different PowerShell command solved the problem:

`Invoke-WebRequest -Uri 'THE_API_END_POINT' -OutFile data.json`
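To confirm an encoding problem of this kind without relying on jsonlint, one option is to look at the first bytes of the file. The post does not say which encoding data.json ended up with, so the following is only a minimal Python sketch under the assumption that the file starts with a byte-order mark (BOM); the helper names `detect_bom` and `load_json_bytes` are made up for illustration.

```python
import codecs
import json
from typing import Optional

# Byte-order marks, checked longest first so that the UTF-32 LE mark
# (which begins with the UTF-16 LE mark) is not misread as UTF-16.
BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32"),
    (codecs.BOM_UTF32_BE, "utf-32"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16"),
    (codecs.BOM_UTF16_BE, "utf-16"),
]


def detect_bom(raw: bytes) -> Optional[str]:
    """Return a Python codec name if raw starts with a known BOM, else None."""
    for bom, codec in BOMS:
        if raw.startswith(bom):
            return codec
    return None


def load_json_bytes(raw: bytes):
    """Decode using the codec implied by the BOM (plain UTF-8 if none), then parse."""
    codec = detect_bom(raw) or "utf-8"
    return json.loads(raw.decode(codec))
```

For a file on disk, read it in binary mode (`open("data.json", "rb").read()`) and pass the bytes to `detect_bom`. A result other than `None` means the file carries a BOM, which some strict JSON consumers reject even though the text itself is valid.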

EOF

Recently our QA reported an interesting issue regarding the native app and our website: when the webpage was shared in the LinkedIn iOS app and/or the Facebook iOS app, the built-in browsers could not display it correctly and showed a blank page instead.

Here we will set up a single-node Kubernetes cluster on a Windows 10 PC (in my case a Surface 5 with 16GB RAM). If you are new to Docker, feel free to check out Jump-start with docker.

We are going to set up:

(edited 10.06.2020: Updated how to get User ID as LinkedIn upgraded their endpoints)

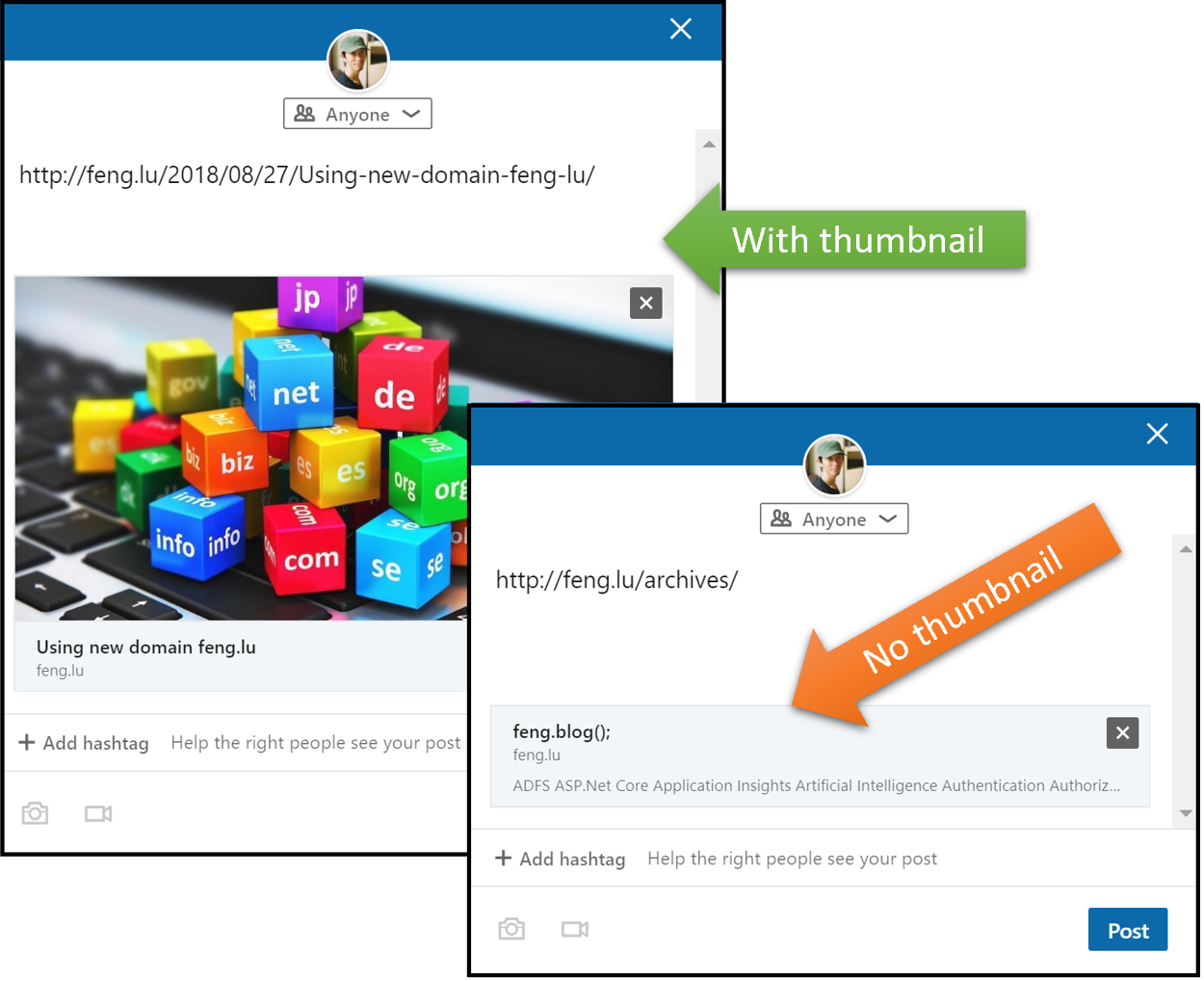

Nowadays it is pretty common to share articles on social media such as Facebook and LinkedIn. Thanks to the widely implemented Open Graph protocol, a shared link is no longer just a dry URL, but comes with rich text and thumbnails.

However, there are still some web pages that do not have Open Graph implemented, which significantly reduces readers’ willingness to click them.
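A quick way to check whether a page has Open Graph implemented is to look for `<meta property="og:...">` tags in its HTML. The sketch below uses only Python's standard library; the class and function names are illustrative, and it assumes the page's HTML has already been fetched into a string.

```python
from html.parser import HTMLParser


class OpenGraphParser(HTMLParser):
    """Collect <meta property="og:..." content="..."> tags from an HTML document."""

    def __init__(self):
        super().__init__()
        self.tags = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        prop = attributes.get("property") or ""
        content = attributes.get("content")
        if prop.startswith("og:") and content is not None:
            # Keep the first value seen for each property.
            self.tags.setdefault(prop, content)


def extract_open_graph(html: str) -> dict:
    """Return a dict of Open Graph properties found in the given HTML."""
    parser = OpenGraphParser()
    parser.feed(html)
    return parser.tags
```

An empty result means that a share preview has no `og:title`, `og:description` or `og:image` to draw on, which is the situation described above.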

In addition, even if you introduce the Open Graph tags as a hotfix, you will sometimes have to wait approximately 7 days for the LinkedIn crawler to refresh its preview cache, as mentioned in the LinkedIn documentation:

The first time that LinkedIn’s crawlers visit a webpage when asked to share content via a URL, the data it finds (Open Graph values or our own analysis) will be cached for a period of approximately 7 days.

This means that if you subsequently change the article’s description, upload a new image, fix a typo in the title, etc., you will not see the change represented during any subsequent attempts to share the page until the cache has expired and the crawler is forced to revisit the page to retrieve fresh content.

Some solutions are here and here, but they are more like workarounds.

We can overcome this issue by using the LinkedIn API, which provides huge flexibility for customizing the sharing experience.

Other articles in this series:

In the second part of this series, we went through the detailed technical design that is based on IOTA. A quick recap:

Although the core data schema is quite easy to implement, companies and developers might face some challenges when getting started, such as:

We want to address the above challenges and help everyone benefit from data integrity and lineage. Therefore, we have built the “Data Lineage Service” application. Developers and companies can apply this technology without a deep understanding of IOTA and the MAM protocol. It can be used either as a standalone application or as a microservice that integrates with existing systems.

The key functions are:

Other articles in this series:

In my previous article, we discussed different approaches for solving the data integrity and lineage challenges, and concluded that the “Hashing with DLT” solution is the direction in which we will move forward. In this article, we will take a deep dive into it. Please note that Veracity’s work on data integrity and data lineage is testing many technologies in parallel. We utilise and test proven centralized technologies as well as new distributed ledger technologies like Tangle and Blockchain. This article series uses the IOTA Tangle as the distributed ledger technology. The use cases described can be solved with other technologies. This article does not necessarily reflect the technologies used in Veracity production environments.

As Veracity is part of an Open Industry Ecosystem, we have focused our data integrity and data lineage work on public DLT and open-source technologies. We believe that to succeed in providing transparency from the user to the origin of data, many technology vendors must collaborate around common standards and technologies. The organizational setup and philosophies of some of the public distributed ledgers provide the right environment to learn and develop fast with an adaptive ecosystem.
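The general idea behind hashing for data integrity can be sketched in a few lines of Python. This is not the design from the article series: SHA-256 is an assumed hash function, and the `InMemoryLedger` class is a hypothetical stand-in for a distributed ledger. It only illustrates that the ledger stores a fingerprint of the data rather than the data itself, and that any later change to the data is detectable.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


class InMemoryLedger:
    """Hypothetical stand-in for a distributed ledger: an append-only list of digests."""

    def __init__(self):
        self._entries = []

    def publish(self, data: bytes) -> int:
        """Record the fingerprint of the data and return its position in the ledger."""
        self._entries.append(fingerprint(data))
        return len(self._entries) - 1

    def verify(self, data: bytes, index: int) -> bool:
        """Check that the data still matches the fingerprint recorded at index."""
        return self._entries[index] == fingerprint(data)
```

A data provider would call `publish` when the data is created, and a consumer would call `verify` with the data they received; a `False` result means the data differs from what was originally recorded.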

Other articles in this series:

With the proliferation of data – collecting and storing it, sharing it, mining it for gains – a basic question goes unanswered: is this data even good? The quality of data is of utmost concern because you can’t do meaningful analysis on data which you can’t trust. Here in Veracity, we are trying to address this very concern. This is a 3-part series, going all the way from concept to a working implementation using DLT (Distributed Ledger Technology).

Side note: Veracity is designed to help companies unlock, qualify, combine and prepare data for analytics and benchmarking. It helps data providers to easily onboard data to the platform, and enables data consumers to access and mine value. The data can be from various sources, such as sensors and edge devices, production systems, historical databases and human inputs. Data is generated, transferred, processed and stored, from one system to another system, one company to another company.

Veracity is by DNV GL, and DNV GL has held a strong brand for more than 150 years as being a trusted 3rd party, yet it is still pretty common to hear questions from data consumers such as:

1. Can I trust the data I got from Veracity?

2. How was the data collected and processed?

Shortly after I renewed my blog domain fenglu.me, it crossed my mind: “hey, is it possible to register a top-level domain with my family name .lu? So I can literally have my name for my site: feng.lu! That will be cool!”

(picture copyright: www.dreamhost.com)

And (after googling), yes! It is possible! .lu is the Internet country code top-level domain for Luxembourg. OK… (continue googling) “Can I register a .lu domain without being a Luxembourger?” “No problem!” Great!

Long story short, after some quick research on vendors and paying 24 Euro, I got the brand new feng.lu domain! :)

The rest is pretty straightforward:

Happy blogging!