Introduction

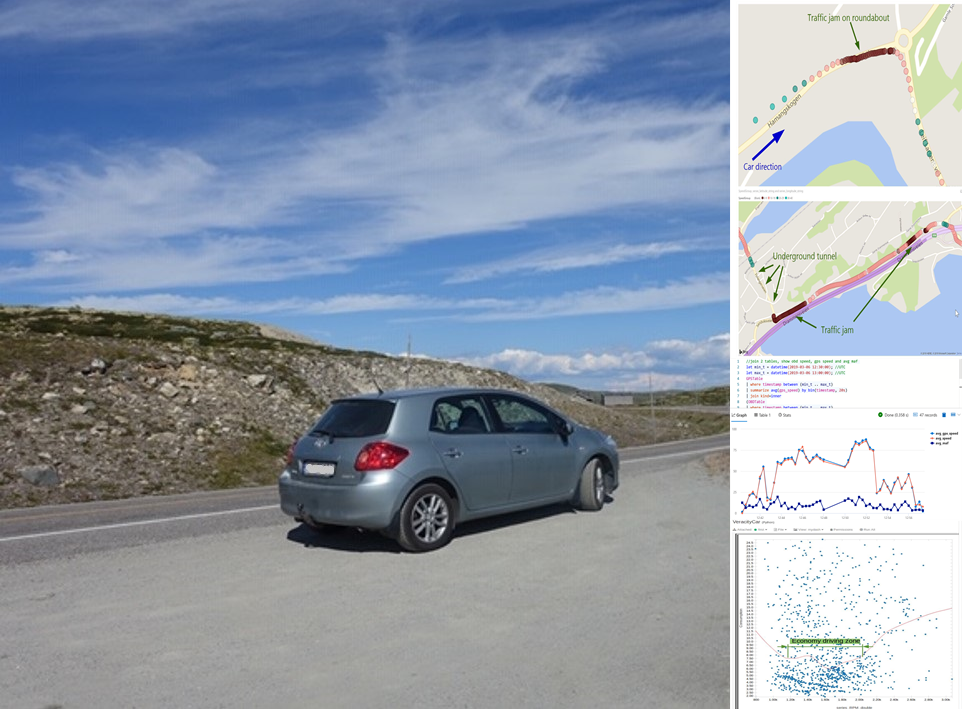

With the proliferation of data (collecting and storing it, sharing it, mining it for gains), a basic question goes unanswered: is this data even good? Data quality is of utmost concern because you can’t do meaningful analysis on data you can’t trust. Here at Veracity, we are trying to address this very concern. This is a three-part series, going all the way from concept to a working implementation using DLT (Distributed Ledger Technology).

As a side note, Veracity is designed to help companies unlock, qualify, combine and prepare data for analytics and benchmarking. It helps data providers easily onboard data to the platform and enables data consumers to access it and mine it for value. The data can come from various sources, such as sensors and edge devices, production systems, historical databases and human inputs. It is generated, transferred, processed and stored as it moves from one system to another and from one company to another.

Veracity comes from DNV GL, which has held a strong brand as a trusted third party for more than 150 years. Yet it is still pretty common to hear questions from data consumers such as:

1. Can I trust the data I got from Veracity?

2. How was the data collected and processed?
